Ethics

The University of Pennsylvania Institutional Review Board (Section 8: Social and Behavioral Research) approved this study and waived formal consent because the risks of participation were determined to be minimal. All methods were performed in accordance with relevant guidelines and regulations.

Research design and sample

Using a national sample, we conducted a randomized controlled trial embedded in a larger research study described elsewhere and preregistered at ClinicalTrials.gov (NCT04747327). We recruited our online sample through Prolific (www.prolific.com), a research company developed by behavioral scientists.

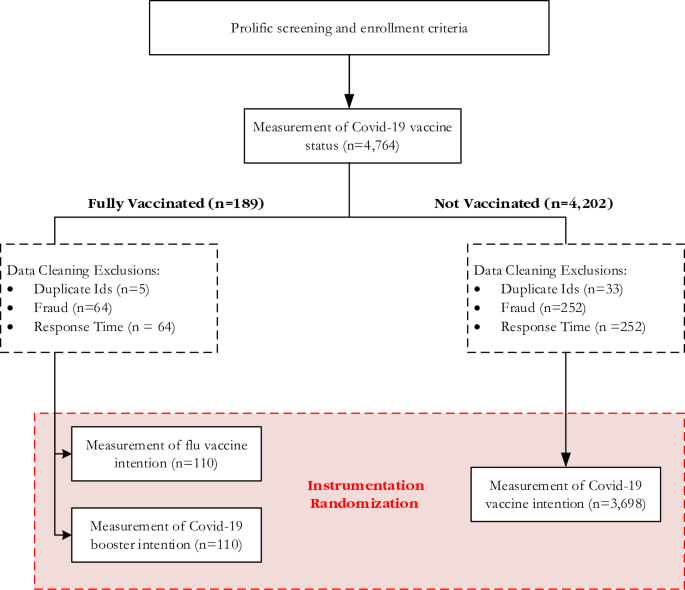

We used demographic filters provided by Prolific to limit enrollment to adults (18 years and older) residing in the United States. Prolific also provides filters for lifestyle and health behavior screening, one of which measures COVID-19 vaccination status. For the purposes of the larger research study, we selected people who had not been vaccinated against COVID-19. Because Prolific had conducted its screening several months before our experiment, COVID-19 vaccination status was re-measured with a survey questionnaire. At that point, people who reported having received a COVID-19 vaccine were not excluded. The study therefore enrolled one group of people who had received a COVID-19 vaccine and one group who had not.

The specific vaccines asked about depended on the participant’s vaccination history

Participants who had received a COVID-19 vaccine were asked about their intentions to receive a COVID-19 booster vaccine and an influenza vaccine. Those who had not received a COVID-19 vaccine were asked only about their intention to get vaccinated against COVID-19; they were not asked about their intention to receive the influenza vaccine.

The group of respondents who had not received a COVID-19 vaccine also participated in a separate randomized experiment comparing the effects of vaccine mandates and incentives; those results are detailed elsewhere6. In brief, participants in that experiment were randomly assigned to imagine a hypothetical scenario or not (for example, some were assigned to imagine a hypothetical vaccine mandate policy). Because both the presence and the type of scenario were randomly assigned, they do not bias the measurement effects estimated in the current study.

Measuring vaccination intentions

Study results in people fully vaccinated against COVID-19 were based on answers to the following questions:

If the annual flu vaccine becomes available in the next four weeks, would you want to get vaccinated?

If a booster shot of the coronavirus disease (COVID-19) vaccine becomes available in the next four weeks, would you be willing to get it?

Those who had not been vaccinated against COVID-19 were not asked the above questions. Instead, this group’s findings were based on responses to:

COVID-19 shots are available. Do you want to get vaccinated against COVID-19 within the next four weeks?

The above items specify the time frame of the future action (i.e., vaccination within four weeks) because doing so increases the validity and reliability of behavioral intention measures7. However, measures of intention are not assumed to predict future behavior perfectly3. People who intend to get vaccinated within a certain time frame may not always succeed if they encounter logistical problems or other barriers beyond their control3.

We also recognize that people who do not wish to be vaccinated within the specified four-week period may have a variety of reasons, including not being eligible for vaccination within this period. Even if the measure specified a longer period, some people would still be ineligible for vaccination. (For example, some people may have previously experienced an allergic reaction that precludes vaccination, even over an extended period.) Fortunately, regardless of the time period specified, random assignment helps ensure that people eligible for vaccination are evenly distributed across all study arms.

Vaccine distribution schedule and recommendations

At the time of our study, vaccines against COVID-19 and influenza were officially recommended for adults. COVID-19 vaccines had been available for about 10 months, and the first COVID-19 booster vaccines were expected to become available within weeks, at around the same time as the influenza vaccine. Distribution of COVID-19 vaccines began in December 2020, with limited availability initially. This study was conducted in September 2021, by which time access to COVID-19 vaccines had expanded significantly nationwide.

Standard socio-demographic measurements

To characterize all participants, we also measured current age, gender, race, education level, level of financial stress, and political affiliation.

Statistical analysis

Qualtrics, a web-based software platform, was used to host the online experiment, automate randomization, and collect data, and Stata 16 statistical software was used for analysis. The primary analytic goal was to test the effect of including an “unsure” response option compared with a dichotomous response set. The analyses that follow used Stata, available from StataCorp (https://www.stata.com/); the software version used was Stata18-MP.

In the primary analysis, we estimated regression models with a binary dependent variable (Vi), using two constructions of this outcome variable. First, the variable was defined as a “yes” outcome: Vi = 1 if a given respondent said they would like to receive the vaccine, and Vi = 0 otherwise. We also considered a specification with a “no” outcome as the dependent variable. Here, the dependent variable equals 1 if the respondent indicated that they did not wish to be vaccinated, and 0 if the respondent wanted to get the vaccine or was unsure about getting it.
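The two outcome constructions can be illustrated with a minimal Python sketch. It is not the authors' Stata code; the function name `code_outcomes` and the response labels `"yes"`, `"no"`, and `"unsure"` are assumptions made for illustration.

```python
def code_outcomes(response):
    """Return (yes_outcome, no_outcome) for a single survey response.

    The labels "yes", "no", and "unsure" are hypothetical stand-ins
    for the actual answer choices shown to respondents.
    """
    normalized = response.strip().lower()
    if normalized not in {"yes", "no", "unsure"}:
        raise ValueError(f"unexpected response: {response!r}")
    # "Yes" outcome: 1 only for respondents who want the vaccine.
    yes_outcome = 1 if normalized == "yes" else 0
    # "No" outcome: 1 only for respondents who do not want the vaccine;
    # "yes" and "unsure" answers are both coded 0.
    no_outcome = 1 if normalized == "no" else 0
    return yes_outcome, no_outcome


for answer in ("yes", "no", "unsure"):
    print(answer, code_outcomes(answer))
```

Under this coding, an unsure respondent scores 0 on both outcomes, which is why the two specifications are not mirror images of each other once the additional option is offered.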

Finally, we estimated the relative uncertainty in decisions across vaccines by comparing the effect of the “Unsure” variable on definitive responses (“yes” or “no”). To do this, we restructured the data and “stacked” the binary “yes” and “no” outcome data to assess simultaneously the net change in outcomes associated with the measurement effect (this is akin to taking the absolute value of the changes). The new variable takes the value 1 if the respondent gave a definitive answer (i.e., “yes” or “no”) to the vaccination intention question and 0 otherwise (i.e., “I don’t know”). We report the net change away from a definitive answer, whether affirmative (“Yes, I intend to get the vaccine”) or negative (“No, I do not intend to get the vaccine”), associated with including the “I don’t know” option.
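As a rough illustration of the definitive-answer indicator, the sketch below computes the difference in the share of definitive answers between respondents who were and were not offered the additional option. This simple difference in means stands in for the regression coefficient only when no other regressors are included. The field names `unsure_offered` and `response` and the toy records are invented for illustration and are not study data.

```python
from statistics import mean


def definitive(response):
    """1 if the answer is definitive ("yes" or "no"), 0 otherwise."""
    return 1 if response in ("yes", "no") else 0


def net_change_definitive(records):
    """Share of definitive answers with the option minus the share without it."""
    with_option = [definitive(r["response"])
                   for r in records if r["unsure_offered"] == 1]
    without_option = [definitive(r["response"])
                      for r in records if r["unsure_offered"] == 0]
    return mean(with_option) - mean(without_option)


# Made-up toy records, for illustration only.
toy_records = [
    {"unsure_offered": 0, "response": "yes"},
    {"unsure_offered": 0, "response": "no"},
    {"unsure_offered": 1, "response": "yes"},
    {"unsure_offered": 1, "response": "unsure"},
]
print(net_change_definitive(toy_records))
```

A negative value indicates movement away from definitive answers when the additional option is available.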

To examine potential order effects, we included an indicator for whether the respondent viewed the answer choice set in which the “No” answer choice was presented first (denoted as “NoFirst”). We also included an indicator for whether an “I don’t know” option was included (denoted as “Unsure”). The regression is formally specified as:

$$V_{i}=\alpha +\beta_{NoFirst}\,\mathit{NoFirst}_{i}+\beta_{Unsure}\,\mathit{Unsure}_{i}+Z_{i}+e_{i}$$

(1)

The coefficients βNoFirst and βUnsure measure the corresponding measurement effects. For respondents who had not received a COVID-19 vaccine, we also included in Eq. (1) an additional vector of dummy variables, Zi, which collectively indicate whether the respondent viewed one of 10 randomized hypothetical scenarios (5; summarized above and detailed elsewhere). The measurement effects NoFirst and Unsure are interpreted relative to a dichotomous “yes/no” response option set. The variance-covariance matrix was estimated using White heteroskedasticity-robust standard errors.
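For reference, the basic form of the White estimator can be written as follows. This assumes Eq. (1) is estimated by ordinary least squares; the text does not state which variant of the robust estimator was used, and finite-sample-adjusted versions differ from this form by a scalar multiplier. Here $x_i$ denotes the vector of regressors for respondent $i$ (a constant, $\mathit{NoFirst}_i$, $\mathit{Unsure}_i$ and, where applicable, the scenario dummies), $X$ is the matrix stacking these vectors as rows, and $\hat{e}_i$ is the fitted residual.

$$\widehat{\operatorname{Var}}(\hat{\beta})=\left(X^{\top}X\right)^{-1}\left(\sum_{i}\hat{e}_{i}^{2}\,x_{i}x_{i}^{\top}\right)\left(X^{\top}X\right)^{-1}$$

Robust standard errors are a natural choice here because a binary dependent variable in a linear model has an error variance that depends on the regressors.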

Data cleaning and sample size

When cleaning the data, we removed records with duplicate identification numbers or a fraud score greater than 0. Fraud scores and identification numbers are assigned by Qualtrics. A fraud score is a number that indicates how likely a research participant’s response is to be fraudulent. Fraud scores allow research studies to identify and remove data created by bots that take surveys en masse or by people who participate multiple times on someone else’s behalf.
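The cleaning rule can be sketched in Python as below. The field names `participant_id` and `fraud_score` are hypothetical, and keeping the first unflagged record for each identification number is an assumption; the text does not say which record of a duplicated set was retained, if any.

```python
def clean_responses(rows):
    """Drop rows with a fraud score above 0 and repeated participant IDs.

    Assumption: the first unflagged row for each ID is kept and later
    rows with the same ID are dropped.
    """
    seen_ids = set()
    kept = []
    for row in rows:
        if row["fraud_score"] > 0:
            continue  # flagged as potentially fraudulent
        if row["participant_id"] in seen_ids:
            continue  # duplicate identification number
        seen_ids.add(row["participant_id"])
        kept.append(row)
    return kept


# Made-up rows, for illustration only.
raw_rows = [
    {"participant_id": "A1", "fraud_score": 0},
    {"participant_id": "A1", "fraud_score": 0},
    {"participant_id": "B2", "fraud_score": 30},
    {"participant_id": "C3", "fraud_score": 0},
]
print([row["participant_id"] for row in clean_responses(raw_rows)])
```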

At this time, it is unclear whether survey data quality can be improved by excluding surveys with unusually fast completion times. Some previous research suggests that measurement effects may be stronger for respondents with the fastest completion times27,28. Others argue that speeding respondents simply add random noise to the data, which either does not change the results or only slightly weakens correlations (31, 32). We conducted the analyses after excluding data from respondents who were unlikely to have been paying attention. To do so, we excluded the fastest 5% of respondents, following a recommendation based on completion times more than 1.5 standard deviations below the mean (31, 32). Results from the screened data are presented in the tables, figures, and discussion. We also ran the models using the full sample, and the results did not change meaningfully.
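A minimal sketch of the completion-time screen follows. The field name `seconds` and both function names are hypothetical, and how ties at the cutoff were handled is not stated in the text. The first function drops the fastest 5% of respondents; the second computes the alternative threshold of 1.5 standard deviations below the mean, so the two rules can be compared on a given sample.

```python
import math
from statistics import mean, stdev


def drop_fastest(rows, share=0.05):
    """Return rows sorted by completion time with the fastest `share` removed."""
    ordered = sorted(rows, key=lambda r: r["seconds"])
    n_drop = math.floor(len(ordered) * share)
    return ordered[n_drop:]


def sd_cutoff(rows, k=1.5):
    """Completion time lying k sample standard deviations below the mean."""
    times = [r["seconds"] for r in rows]
    return mean(times) - k * stdev(times)


# Made-up completion times (in seconds), for illustration only.
timing_rows = [{"seconds": s} for s in range(1, 101)]
screened = drop_fastest(timing_rows)
print(len(screened), sd_cutoff(timing_rows))
```

Running the models on both the screened and the full sample, as described above, shows whether conclusions depend on this exclusion rule.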